TRITON — Multisensory Based Underwater Intervention through Cooperative Marine Robots

Global Objectives

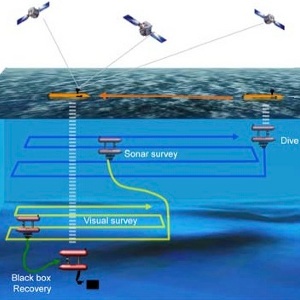

The TRITON project proposes two scenarios as a proof of concept to demonstrate the developed capabilities: (1) the search and recovery of an object of interest (e.g. a black box) and (2) an intervention on an underwater panel in a permanent observatory.

The first mission scenario will be divided into several sub-tasks. First, the mission will begin with the deployment of the AUV and the intervention I-AUV, which should then adopt and maintain a safe formation that enables acoustic communication and absolute positioning of both vehicles. Then, the AUV will carry out a sonar survey to detect the signal emitted by a pinger in the black box. Detection of this signal will be followed by a second survey, whose objective is to create a photomosaic of the area, making it possible to identify the object visually. Finally, the AUV will be sent back to perform the intervention task and recover the object.
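The sub-tasks of this first scenario follow a strict order, which can be illustrated as a simple phase sequence. The sketch below is only an illustration under stated assumptions: the phase names, the `next_phase` helper, and the rule that a phase is repeated until it succeeds are hypothetical and are not taken from the TRITON mission control software.

```python
from enum import Enum, auto


class Phase(Enum):
    """Hypothetical phase names for the search-and-recovery scenario."""
    DEPLOYMENT = auto()          # both vehicles are put in the water
    FORMATION = auto()           # safe formation for comms and positioning
    SONAR_SURVEY = auto()        # listen for the black-box pinger
    PHOTOMOSAIC_SURVEY = auto()  # second survey to build the photomosaic
    INTERVENTION = auto()        # recover the object
    DONE = auto()


# Enum members iterate in definition order, which matches the text.
SEQUENCE = list(Phase)


def next_phase(current: Phase, phase_succeeded: bool) -> Phase:
    """Advance to the following phase only if the current one succeeded.

    Assumption: a failed phase is simply repeated; a real mission
    controller would likely also handle aborts and timeouts.
    """
    if current is Phase.DONE or not phase_succeeded:
        return current
    return SEQUENCE[SEQUENCE.index(current) + 1]


def run_mission(outcomes):
    """Replay a list of per-step success flags and return the phase trace."""
    phase = Phase.DEPLOYMENT
    trace = [phase]
    for ok in outcomes:
        phase = next_phase(phase, ok)
        trace.append(phase)
    return trace
```

For example, `run_mission([True, True, False, True])` stays in the sonar survey for one extra step when the pinger is not detected, then moves on to the photomosaic survey.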

The second mission scenario will also begin with the deployment and formation of both marine robots in order to establish communications and absolute positioning. Then, the AUV will use acoustics to interrogate a transponder mounted on the observatory in order to guide the vehicle's transit to the panel. When visual contact with the objective is established, the AUV will approach the panel using visual servoing. The final part of the docking operation will involve a mechanism to rigidly attach the vehicle to the panel. After this, the manipulation will take place. Two demonstrative applications are foreseen: opening/closing a valve and connecting/disconnecting a cable.
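The approach to the panel switches between guidance modes depending on what the vehicle can sense. A minimal sketch of that switching logic follows; the function name `select_mode`, the mode labels, and the `docking_range_m` threshold of 0.5 m are assumptions made for illustration and do not come from the project.

```python
def select_mode(visual_contact: bool,
                range_to_panel_m: float,
                docked: bool,
                docking_range_m: float = 0.5) -> str:
    """Pick a guidance mode for the panel-intervention scenario.

    Assumed rule set (hypothetical, for illustration only):
    - once rigidly attached to the panel, manipulation can start;
    - without visual contact, transit is guided by the acoustic transponder;
    - with visual contact, approach by visual servoing;
    - within docking_range_m of the panel, engage the docking mechanism.
    """
    if docked:
        return "manipulation"
    if not visual_contact:
        return "acoustic_homing"
    if range_to_panel_m > docking_range_m:
        return "visual_servoing"
    return "docking"
```

Checking `docked` first keeps the vehicle in manipulation mode even if the camera briefly loses the panel while attached, which is one reasonable design choice among several.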

Need for coordination and added value of the coordination

The TRITON coordinated research project aims to use autonomous vehicles to execute complex underwater intervention tasks. The project focuses on the coordinated operation of multiple vehicles executing a cooperative mission, on increasing the dexterity of the hand-arm system available in the I-AUV, and on improving the sensing capabilities needed in all stages of the missions. Accordingly, TRITON has been broken down into three subprojects: COMAROB, responsible for the cooperation of two marine robots; VISUAL2, in charge of the visual mapping and the optical object identification; and GRASPER, responsible for the multisensory based autonomous manipulation.

Specific Objectives of Each Subproject

The “COMAROB” subproject (UdG) has three main goals: (1) to consolidate the systems developed so far, in order to safely bridge the gap between water tank experiments and open sea trials; (2) to extend the system’s architecture, currently used for the NGC (navigation, guidance and control), obstacle avoidance, path planning and mission control of each vehicle, to enable cooperation among the vehicles; and (3) to carry out SLAM-based acoustic mapping while simultaneously using cooperative navigation for ground truth.

The main goals of the “GRASPER” subproject (UJI) cover the main aspects of mission specification by the user and of the robotic manipulation process: (1) development of the user interface and simulation capabilities needed for the complete project, improving the initial interface developed in the previous project; (2) design and integration of a more capable hand-arm system than the one previously developed within the RAUVI project. For this project, we aim to build a more advanced gripper that can be used for different tasks, and to improve the grasping/manipulation functionalities by means of better multisensory integration; (3) development and application of new methods for underwater manipulation that go beyond the current state of the art. This task will be in charge of planning the details of the intervention mission and performing them in realistic conditions. To that end, a new grasp planner will be developed that makes use of the range and visual information provided by the I-AUV sensors.

The “VISUAL2” subproject (UIB) will focus on: (1) enhancing the vehicle self-localization performance using SLAM-based strategies fed with stereo images and acoustic information, taking into account the special features of underwater scenarios and the intended missions of TRITON; (2) developing safe 3D path planning algorithms and obstacle avoidance strategies; and (3) developing a laser and video fusion framework to extract enhanced structural information about the scenes of interest and the objects to be manipulated.

Project Leader

Project Collaborators

- Alberto Ortiz Rodriguez
- Yolanda González Cid
- Javier Antich Tobaruela
- José Guerrero Sastre
- Antoni Burguera Burguera
- Francisco Bonnín Pascual
- Emilio García Fidalgo
- Miquel Massot Campos, Researcher at Ocean Infinity
- Pep Lluis Negre Carrasco, Researcher at ROVCO Ltd
- Stephan Wirth, Senior Roboticist at Savioke

Related Publications

- I-AUV Docking and Panel Intervention at Sea. Sensors
- Robust WorldCentric Stereo EKF Localization with Active Loop Closing for AUVs. Pattern Recognition and Image Analysis
- Intervention AUVs: The Next Challenge. Annual Reviews in Control
- Structured light and stereo vision for underwater 3D reconstruction. MTS/IEEE Oceans
- Stereo SLAM for Robust Dense 3D Reconstruction of Underwater Environments. MTS/IEEE Oceans
- Side Scan Sonar images based SLAM
- Intervention AUVs: The Next Challenge. International Federation of Automatic Control World Congress (IFAC-WC)
- Trajectory-Based Visual Localization in Underwater Surveying Missions. Sensors
- Inertial Sensor Self-Calibration in a Visually-Aided Navigation Approach for a Micro-AUV. Sensors
- Visual Sensing for Autonomous Underwater Exploration and Intervention Tasks. Ocean Engineering
- Stereo EKF Pose-based SLAM for AUVs. Open German-Russian Workshop on Pattern Recognition and Image Understanding
- Multipurpose Autonomous Underwater Intervention: A System Integration Perspective. 20th Mediterranean Conference on Control & Automation (MED)
- SSS-SLAM: An Object Oriented Matlab Framework for Underwater SLAM using Side Scan Sonar. XXXV Jornadas de Automática
- Intensity Correction of Side-Scan Sonar Images. Proceedings of the 19th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA 2014)
- LSH for Loop Closing Detection in Underwater Visual SLAM. Proceedings of IEEE International Conference on Emerging Technologies and Factory Automation
- Reducing the computational cost of underwater visual SLAM using dynamic adjustment of overlap detection. Proceedings of IEEE International Conference on Emerging Technologies and Factory Automation
- Stereo Graph-SLAM for Autonomous Underwater Vehicles. Intelligent Autonomous Systems 13 (Proceedings of the 13th International Conference on Intelligent Autonomous Systems IAS13)
- Towards Robust Image Registration for Underwater Visual SLAM. Proceedings of the International Conference on Computer Vision, Theory and Applications (VISAPP)
- Underwater Visual SLAM using a Bottom-Looking Camera
- Vision-based Mobile Robot Motion Control Combining T2 and ND Approaches. Robotica
- Evaluation of a laser based structured light system for 3D reconstruction of underwater environments. Martech 2013 5th International Workshop on Marine Technology
- Visual Odometry for Autonomous Underwater Vehicles. Proceedings of the IEEE/MTS Oceans Conference
- Multisensor Aided Inertial Navigation in 6DOF AUVs using a Multiplicative Error State Kalman Filter. Proceedings of the IEEE/MTS Oceans Conference
- The UspIC: Performing Scan Matching Localization Using an Imaging Sonar. Sensors
- New Insights on Laser-based Structured Light for Underwater 3D Reconstruction
- Revisiting Image Vignetting Correction by Constrained Minimization of Log-Intensity Entropy. Advances in Computational Intelligence
- 6DOF EKF SLAM in Underwater Environments
- Visual Odometry Parameters Optimization for Autonomous Underwater Vehicles. Fifth International Workshop on Marine Technology